What I Wish I Knew When Learning Haskell

Version 2.5

% What I Wish I Knew When Learning Haskell (Version 2.5) % Stephen Diehl % February 2020

Version

This is the fifth major draft of this document since 2009.

Pull requests are always accepted for changes and additional content. This is a living document. The only way this document will stay up to date is through the kindness of readers like you and community patches and pull requests on Github.

If you’d like a physical copy of the text you can either print it for yourself (see Printable PDF) or purchase one online:

Author

This text is authored by Stephen Diehl.

- Web: https://www.stephendiehl.com

- Twitter: https://twitter.com/smdiehl

- Github: https://github.com/sdiehl

Special thanks for Erik Aker for copyediting assistance.

License

Copyright © 2009-2020 Stephen Diehl

This code included in the text is dedicated to the public domain. You can copy, modify, distribute and perform the code, even for commercial purposes, all without asking permission.

You may distribute this text in its full form freely, but may not reauthor or sublicense this work. Any reproductions of major portions of the text must include attribution.

The software is provided “as is”, without warranty of any kind, express or implied, including But not limited to the warranties of merchantability, fitness for a particular purpose and noninfringement. In no event shall the authors or copyright holders be liable for any claim, damages or other liability, whether in an action of contract, tort or otherwise, Arising from, out of or in connection with the software or the use or other dealings in the software.

Basics

What is Haskell?

At its heart Haskell is a lazy, functional, statically-typed programming language with advanced type system features such as higher-rank, higher-kinded parametric polymorphism, monadic effects, generalized algebraic data types, ad-hoc polymorphism through type classes, associated type families, and more. As a programming language, Haskell pushes the frontiers of programming language design more so than any other general purpose language while still remaining practical for everyday use.

Beyond language features, Haskell remains an organic, community-driven effort, run by its userbase instead of by corporate influences. While there are some Haskell companies and consultancies, most are fairly small and none have an outsized influence on the development of the language. This is in stark contrast to ecosystems like Java and Go where Oracle and Google dominate all development. In fact, the Haskell community is a synthesis between multiple disciplines of academic computer science and industrial users from large and small firms, all of whom contribute back to the language ecosystem.

Originally, Haskell was borne out of academic research. Designed as an ML dialect, it was initially inspired by an older language called Miranda. In the early 90s, a group of academics formed the GHC committee to pursue building a research vehicle for lazy programming languages as a replacement for Miranda. This was a particularly in-vogue research topic at the time and as a result the committee attracted various talented individuals who initiated the language and ultimately laid the foundation for modern Haskell.

Over the last 30 years Haskell has evolved into a mature ecosystem, with an equally mature compiler. Even so, the language is frequently reimagined by passionate contributors who may be furthering academic research goals or merely contributing out of personal interest. Although laziness was originally the major research goal, this has largely become a quirky artifact that most users of the language are generally uninterested in. In modern times the major themes of Haskell community are:

- A vehicle for type system research

- Experimentation in the design space of typed effect systems

- Algebraic structures as a method of program synthesis

- Referential transparency as a core language feature

- Embedded domain specific languages

- Experimentation toward practical dependent types

- Stronger encoding of invariants through type-level programming

- Efficient functional compiler design

- Alternative models of parallel and concurrent programming

Although these are the major research goals, Haskell is still a fully general purpose language, and it has been applied in wildly diverse settings from garbage trucks to cryptanalysis for the defense sector and everything in-between. With a thriving ecosystem of industrial applications in web development, compiler design, machine learning, financial services, FPGA development, algorithmic trading, numerical computing, cryptography research, and cybersecurity, the language has a lot to offer to any field or software practitioner.

Haskell as an ecosystem is one that is purely organic, it takes decades to evolve, makes mistakes and is not driven by any one ideology or belief about the purpose of functional programming. This makes Haskell programming simultaneously frustrating and exciting; and therein lies the fun that has been the intellectual siren song that has drawn many talented programmers to dabble in this beautiful language at some point in their lives.

See:

How to Read

This is a guide for working software engineers who have an interest in Haskell but don’t know Haskell yet. I presume you know some basics about how your operating system works, the shell, and some fundamentals of other imperative programming languages. If you are a Python or Java software engineer with no Haskell experience, this is the executive summary of Haskell theory and practice for you. We’ll delve into a little theory as needed to explain concepts but no more than necessary. If you’re looking for a purely introductory tutorial, this probably isn’t the right start for you, however this can be read as a companion to other introductory texts.

There is no particular order to this guide, other than the first chapter which describes how to get set up with Haskell and use the foundational compiler and editor tooling. After that you are free to browse the chapters in any order. Most are divided into several sections which outline different concepts, language features or libraries. However, the general arc of this guide bends toward more complex topics in later chapters.

As there is no ordering after the first chapter I will refer to concepts globally without introducing them first. If something doesn’t make sense just skip it and move on. I strongly encourage you to play around with the source code modules for yourself. Haskell cannot be learned from an armchair; instead it can only be mastered by writing a ton of code for yourself. GHC may initially seem like a cruel instructor, but in time most people grow to see it as their friend.

GHC

GHC is the Glorious Glasgow Haskell Compiler. Originally written in 1989, GHC is now the de facto standard for Haskell compilers. A few other compilers have existed along the way, but they either are quite limited or have bit rotted over the years. At this point, GHC is a massive compiler and it supports a wide variety of extensions. It’s also the only reference implementation for the Haskell language and as such, it defines what Haskell the language is by its implementation.

GHC is run at the command line with the command ghc.

$ ghc --version

The Glorious Glasgow Haskell Compilation System, version 8.8.1$ ghc Example.hs -o example

$ ghc --make Example.hsGHC’s runtime is written in C and uses machinery from GCC infrastructure for its native code generator and can also use LLVM for its native code generation. GHC is supported on the following architectures:

- Linux x86

- Linux x86_64

- Linux PowerPC

- NetBSD x86

- OpenBSD x86

- FreeBSD x86

- MacOS X Intel

- MacOS X PowerPC

- Windows x86_64

GHC itself depends on the following Linux packages.

- build-essential

- libgmp-dev

- libffi-dev

- libncurses-dev

- libtinfo5

ghcup

There are two major packages that need to be installed to use Haskell:

- ghc

- cabal-install

GHC can be installed on Linux and Mac with ghcup by running the following command:

$ curl --proto '=https' --tlsv1.2 -sSf https://get-ghcup.haskell.org | shTo start the interactive user interface, run:

$ ghcup tuiAlternatively, to use the cli to install multiple versions of GHC,

use the install command.

$ ghcup install ghc 8.6.5

$ ghcup install ghc 8.4.4To select which version of GHC is available on the PATH use the

set command.

$ ghcup set ghc 8.8.1This can also be used to install cabal.

$ ghcup install cabalTo modify your shell to include ghc and cabal.

$ source ~/.ghcup/envOr you can permanently add the following to your .bashrc

or .zshrc file:

export PATH="~/.ghcup/bin:$PATH"Package Managers

There are two major Haskell packaging tools: Cabal and Stack. Both take differing views on versioning schemes but can more or less interoperate at the package level. So, why are there two different package managers?

The simplest explanation is that Haskell is an organic ecosystem with no central authority, and as such different groups of people with different ideas and different economic interests about optimal packaging built their own solutions around two different models. The interests of an organic community don’t always result in open source convergence; however, the ecosystem has seen both package managers reach much greater levels of stability as a result of collaboration. In this article, I won’t offer a preference for which system to use: it is left up to the reader to experiment and use the system which best suits your or your company’s needs.

Project Structure

A typical Haskell project hosted on Github or Gitlab will have several executable, test and library components across several subdirectories. Each of these files will correspond to an entry in the Cabal file.

.

├── app # Executable entry-point

│ └── Main.hs # main-is file

├── src # Library entry-point

│ └── Lib.hs # Exposed module

├── test # Test entry-point

│ └── Spec.hs # Main-is file

├── ChangeLog.md # extra-source-files

├── LICENSE # extra-source-files

├── README.md # extra-source-files

├── package.yaml # hpack configuration

├── Setup.hs

├── simple.cabal # cabal configuration generated from hpack

├── stack.yaml # stack configuration

├── .hlint.yaml # hlint configuration

└── .ghci # ghci configurationMore complex projects consisting of multiple modules will include

multiple project directories like those above, but these will be nested

in subfolders with a cabal.project or

stack.yaml in the root of the repository.

.

├── lib-one # component1

├── lib-two # component2

├── lib-three # component3

├── stack.yaml # stack project configuration

└── cabal.project # cabal project configurationAn example Cabal project file, named cabal.project

above, this multi-component library repository would include these

lines.

packages: ./lib-one

./lib-two

./lib-threeBy contrast, an example Stack project stack.yaml for the

above multi-component library repository would be:

resolver: lts-14.20

packages:

- 'lib-one'

- 'lib-two'

- 'lib-three'

extra-package-dbs: []Cabal

Cabal is the build system for Haskell. Cabal is also the standard build tool for Haskell source supported by GHC. Cabal can be used simultaneously with Stack or standalone with cabal new-build.

To update the package index from Hackage, run:

$ cabal updateTo start a new Haskell project, run:

$ cabal init

$ cabal configureThis will result in a .cabal file being created with the

configuration options for our new project.

Cabal can also build dependencies in parallel by passing

-j<n> where n is the number of

concurrent builds.

$ cabal install -j4 --only-dependenciesLet’s look at an example .cabal file. There are two main

entry points that any package may provide: a library and an

executable. Multiple executables can be defined, but only

one library. In addition, there is a special form of executable entry

point Test-Suite, which defines an interface for invoking

unit tests from cabal.

For a library, the exposed-modules

field in the .cabal file indicates which modules within the

package structure will be publicly visible when the package is

installed. These modules are the user-facing APIs that we wish to expose

to downstream consumers.

For an executable, the main-is field

indicates the module that exports the main function

responsible for running the executable logic of the application. Every

module in the package must be listed in one of

other-modules, exposed-modules or

main-is fields.

name: mylibrary

version: 0.1

cabal-version: >= 1.10

author: Paul Atreides

license: MIT

license-file: LICENSE

synopsis: My example library.

category: Math

tested-with: GHC

build-type: Simple

library

exposed-modules:

Library.ExampleModule1

Library.ExampleModule2

build-depends:

base >= 4 && < 5

default-language: Haskell2010

ghc-options: -O2 -Wall -fwarn-tabs

executable "example"

build-depends:

base >= 4 && < 5,

mylibrary == 0.1

default-language: Haskell2010

main-is: Main.hs

Test-Suite test

type: exitcode-stdio-1.0

main-is: Test.hs

default-language: Haskell2010

build-depends:

base >= 4 && < 5,

mylibrary == 0.1To run an “executable” under cabal execute the

command:

$ cabal run

$ cabal run <name> # when there are several executables in a projectTo load the “library” into a GHCi shell under

cabal execute the command:

$ cabal repl

$ cabal repl <name>The <name> metavariable is either one of the

executable or library declarations in the .cabal file and

can optionally be disambiguated by the prefix

exe:<name> or lib:<name>

respectively.

To build the package locally into the ./dist/build

folder, execute the build command:

$ cabal buildTo run the tests, our package must itself be reconfigured with the

--enable-tests flag and the build-depends

options. The Test-Suite must be installed manually, if not

already present.

$ cabal install --only-dependencies --enable-tests

$ cabal configure --enable-tests

$ cabal test

$ cabal test <name>Moreover, arbitrary shell commands can be invoked with the GHC environmental variables. It

is quite common to run a new bash shell with this command such that the

ghc and ghci commands use the package

environment. This can also run any system executable with the

GHC_PACKAGE_PATH variable set to the libraries package database.

$ cabal exec

$ cabal exec bashThe haddock documentation can be generated for

the local project by executing the haddock command. The

documentation will be built to the ./dist folder.

$ cabal haddockWhen we’re finally ready to upload to Hackage ( presuming we have a Hackage account set up ), then we can build the tarball and upload with the following commands:

$ cabal sdist

$ cabal upload dist/mylibrary-0.1.tar.gzThe current state of a local build can be frozen with all current package constraints enumerated:

$ cabal freezeThis will create a file cabal.config with the constraint

set.

constraints: mtl ==2.2.1,

text ==1.1.1.3,

transformers ==0.4.1.0The cabal configuration is stored in

$HOME/.cabal/config and contains various options including

credential information for Hackage upload.

A library can also be compiled with runtime profiling information enabled. More on this is discussed in the section on Concurrency and Profiling.

library-profiling: TrueAnother common flag to enable is documentation which

forces the local build of Haddock documentation, which can be useful for

offline reference. On a Linux filesystem these are built to the

/usr/share/doc/ghc-doc/html/libraries/ directory.

documentation: TrueCabal can also be used to install packages globally to the system

PATH. For example the parsec package to your

system from Hackage, the upstream source of

Haskell packages, invoke the install command:

$ cabal install parsec --installdir=~/.local/bin # latest versionTo download the source for a package, we can use the get

command to retrieve the source from Hackage.

$ cabal get parsec # fetch source

$ cd parsec-3.1.5

$ cabal configure

$ cabal build

$ cabal installCabal New-Build

The interface for Cabal has seen an overhaul in the last few years and has moved more closely towards the Nix-style of local builds. Under the new system packages are separated into categories:

- Local Packages - Packages are built from a configuration file which specifies a path to a directory with a cabal file. These can be working projects as well as all of its local transitive dependencies.

- External Packages - External packages are packages

retrieved from either the public Hackage or a private Hackage

repository. These packages are hashed and stored locally in

~/.cabal/storeto be reused across builds.

As of Cabal 3.0 the new-build commands are the default operations for

build operations. So if you type cabal build using Cabal

3.0 you are already using the new-build system.

Historically these commands were separated into two different command

namespaces with prefixes v1- and v2-, with v1

indicating the old sandbox build system and the v2 indicating the

new-build system.

The new build commands are listed below:

new-build Compile targets within the project.

new-configure Add extra project configuration

new-repl Open an interactive session for the given component.

new-run Run an executable.

new-test Run test-suites

new-bench Run benchmarks

new-freeze Freeze dependencies.

new-haddock Build Haddock documentation

new-exec Give a command access to the store.

new-update Updates list of known packages.

new-install Install packages.

new-clean Clean the package store and remove temporary files.

new-sdist Generate a source distribution file (.tar.gz).Cabal also stores all of its build artifacts inside of a

dist-newstyle folder stored in the project working

directory. The compilation artifacts are of several categories.

.hi- Haskell interface modules which describe the type information, public exports, symbol table, and other module guts of compiled Haskell modules..hie- An extended interface file containing module symbol data..hspp- A Haskell preprocessor file..o- Compiled object files for each module. These are emitted by the native code generator assembler..s- Assembly language source file..bc- Compiled LLVM bytecode file..ll- An LLVM source file.cabal_macros.h- Contains all of the preprocessor definitions that are accessible when using the CPP extension.cache- Contains all artifacts generated by solving the constraints of packages to set up a build plan. This also contains the hash repository of all external packages.packagedb- Database of all of the cabal metadata of all external and local packages needed to build the project. See Package Databases.

These all get stored under the dist-newstyle folder

structure which is set up hierarchically under the specific CPU

architecture, GHC compiler version and finally the package version.

dist-newstyle

├── build

│ └── x86_64-linux

│ └── ghc-8.6.5

│ └── mypackage-0.1.0

│ ├── build

│ │ ├── autogen

│ │ │ ├── cabal_macros.h

│ │ │ └── Paths_mypackage.hs

│ │ ├── libHSmypackage-0.1.0-inplace.a

│ │ ├── libHSmypackage-0.1.0-inplace-ghc8.6.5.so

│ │ ├── MyPackage

│ │ │ ├── Example.dyn_hi

│ │ │ ├── Example.dyn_o

│ │ │ ├── Example.hi

│ │ │ ├── Example.o

│ │ ├── MyPackage.dyn_hi

│ │ ├── MyPackage.dyn_o

│ │ ├── MyPackage.hi

│ │ └── MyPackage.o

│ ├── cache

│ │ ├── build

│ │ ├── config

│ │ └── registration

│ ├── package.conf.inplace

│ │ ├── package.cache

│ │ └── package.cache.lock

│ └── setup-config

├── cache

│ ├── compiler

│ ├── config

│ ├── elaborated-plan

│ ├── improved-plan

│ ├── plan.json

│ ├── solver-plan

│ ├── source-hashes

│ └── up-to-date

├── packagedb

│ └── ghc-8.6.5

│ ├── package.cache

│ ├── package.cache.lock

│ └── mypackage-0.1.0-inplace.conf

└── tmpLocal Packages

Both Stack and Cabal can handle local packages built from the local filesystem, from remote tarballs, or from remote Git repositories.

Inside of the stack.yaml simply specify the git

repository remote and the hash to pull.

resolver: lts-14.20

packages:

# From Git

- git: https://github.com/sdiehl/protolude.git

commit: f5c2bf64b147716472b039d30652846069f2fc70In Cabal to add a remote create a cabal.project file and

add your remote in the source-repository-package

section.

packages: .

source-repository-package

type: git

location: https://github.com/hvr/HsYAML.git

tag: e70cf0c171c9a586b62b3f75d72f1591e4e6aaa1Version Bounds

All Haskell packages are versioned and the numerical quantities in the version are supposed to follow the Package Versioning Policy.

As packages evolve over time there are three numbers which monotonically increase depending on what has changed in the package.

- Major version number

- Minor version number

- Patch version number

-- PVP summary: +-+------- breaking API changes

-- | | +----- non-breaking API additions

-- | | | +--- code changes with no API change

version: 0.1.0.0Every library’s cabal file will have a packages dependencies section

which will specify the external packages which the library depends on.

It will also contain the allowed versions that it is known to build

against in the build-depends section. The convention is to

put the upper bound to the next major unreleased version and the lower

bound at the currently used version.

build-depends:

base >= 4.6 && <4.14,

async >= 2.0 && <2.3,

deepseq >= 1.3 && <1.5,

containers >= 0.5 && <0.7,

hashable >= 1.2 && <1.4,

transformers >= 0.2 && <0.6,

text >= 1.2 && <1.3,

bytestring >= 0.10 && <0.11,

mtl >= 2.1 && <2.3Individual lines in the version specification can be dependent on other variables in the cabal file.

if !impl(ghc >= 8.0)

Build-Depends: fail >= 4.9 && <4.10Version bounds in cabal files can be managed automatically with a

tool cabal-bounds which can automatically generate, update

and format cabal files.

$ cabal-bounds updateSee:

Stack

Stack is an alternative approach to Haskell’s package structure that emerged in 2015. Instead of using a rolling build like Cabal, Stack breaks up sets of packages into release blocks that guarantee internal compatibility between sets of packages. The package solver for Stack uses a different strategy for resolving dependencies than cabal-install has historically used and Stack combines this with a centralised build server called Stackage which continuously tests the set of packages in a resolver to ensure they build against each other.

Install

To install stack on Linux or Mac, run:

curl -sSL https://get.haskellstack.org/ | shFor other operating systems, see the official install directions.

Usage

Once stack is installed, it is possible to setup a build

environment on top of your existing project’s cabal file by

running:

stack initAn example stack.yaml file for GHC 8.8.1 would look like

this:

resolver: lts-14.20

flags: {}

extra-package-dbs: []

packages: []

extra-deps: []Most of the common libraries used in everyday development are already

in the Stackage repository. The

extra-deps field can be used to add Hackage dependencies that are not

in the Stackage repository. They are specified by the package and the

version key. For instance, the zenc package could be added

to stack build in the following way:

extra-deps:

- zenc-0.1.1The stack command can be used to install packages and

executables into either the current build environment or the global

environment. For example, the following command installs the executable

for hlint, a popular linting tool for

Haskell, and places it in the PATH:

$ stack install hlintTo check the set of dependencies, run:

$ stack ls dependenciesJust as with cabal, the build and debug process can be

orchestrated using stack commands:

$ stack build # Build a cabal target

$ stack repl # Launch ghci

$ stack ghc # Invoke the standalone compiler in stack environment

$ stack exec bash # Execute a shell command with the stack GHC environment variables

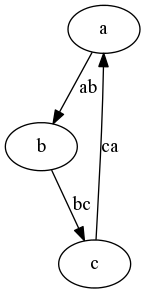

$ stack build --file-watch # Build on every filesystem changeTo visualize the dependency graph, use the dot command piped first into graphviz, then piped again into your favorite image viewer:

$ stack dot --external | dot -Tpng | feh -Hpack

Hpack is an alternative package description language that uses a

structured YAML format to generate Cabal files. Hpack succeeds in DRYing

(Don’t Repeat Yourself) several sections of cabal files that are often

quite repetitive across large projects. Hpack uses a

package.yaml file which is consumed by the command line

tool hpack. Hpack can be integrated into Stack and will

generate resulting cabal files whenever stack build is

invoked on a project using a package.yaml file. The output

cabal file contains a hash of the input yaml file for consistency

checking.

A small package.yaml file might look something like the

following:

name : example

version : 0.1.0

synopsis : My fabulous library

description : My fabulous library

maintainer : John Doe

github : john/example

category : Development

ghc-options: -Wall

dependencies:

- base >= 4.9 && < 5

- protolude

- deepseq

- directory

- filepath

- text

- containers

- unordered-containers

- aeson

- pretty-simple

library:

source-dirs: src

exposed-modules:

- Example

executable:

main: Main.hs

source-dirs: exe

dependencies:

- example

tests:

spec:

main: Test.hs

source-dirs:

- test

- src

dependencies:

- example

- tasty

- tasty-hunitBase

GHC itself ships with a variety of core libraries that are loaded

into all Haskell projects. The most foundational of these is

base which forms the foundation for all Haskell code. The

base library is split across several modules.

- Prelude - The default namespace imported in every module.

- Data - The simple data structures wired into the language

- Control - Control flow functions

- Foreign - Foreign function interface

- Numeric - Numerical tower and arithmetic operations

- System - System operations for Linux/Mac/Windows

- Text - Basic String types.

- Type - Typelevel operations

- GHC - GHC Internals

- Debug - Debug functions

- Unsafe - Unsafe “backdoor” operations

There have been several large changes to Base over the years which have resulted in breaking changes that means older versions of base are not compatible with newer versions.

- Monad Applicative Proposal (AMP)

- MonadFail Proposal (MFP)

- Semigroup Monoid Proposal (SMP)

Prelude

The Prelude is the default standard module. The Prelude is imported by default into all Haskell modules unless either there is an explicit import statement for it, or the NoImplicitPrelude extension is enabled.

The Prelude exports several hundred symbols that are the default datatypes and functions for libraries that use the GHC-issued prelude. Although the Prelude is the default import, many libraries these days do not use the standard prelude instead choosing to roll a custom one on a per-project basis or to use an off-the shelf prelude from Hackage.

The Prelude contains common datatype and classes such as List, Monad, Maybe and most associated functions for manipulating these structures. These are the most foundational programming constructs in Haskell.

Modern Haskell

There are two official language standards:

- Haskell98

- Haskell2010

And then there is what is colloquially referred to as Modern Haskell which is not an official language standard, but an ambiguous term to denote the emerging way most Haskellers program with new versions of GHC. The language features typically included in modern Haskell are not well-defined and will vary between programmers. For instance, some programmers prefer to stay quite close to the Haskell2010 standard and only include a few extensions while some go all out and attempt to do full dependent types in Haskell.

By contrast, the type of programming described by the phrase Modern Haskell typically utilizes some type-level programming, as well as flexible typeclasses, and a handful of Language Extensions.

Flags

GHC has a wide variety of flags that can be passed to configure different behavior in the compiler. Enabling GHC compiler flags grants the user more control in detecting common code errors. The most frequently used flags are:

| Flag | Description |

|---|---|

-fwarn-tabs |

Emit warnings of tabs instead of spaces in the source code |

-fwarn-unused-imports |

Warn about libraries imported without being used |

-fwarn-name-shadowing |

Warn on duplicate names in nested bindings |

-fwarn-incomplete-uni-patterns |

Emit warnings for incomplete patterns in lambdas or pattern bindings |

-fwarn-incomplete-patterns |

Warn on non-exhaustive patterns |

-fwarn-overlapping-patterns |

Warn on pattern matching branches that overlap |

-fwarn-incomplete-record-updates |

Warn when records are not instantiated with all fields |

-fdefer-type-errors |

Turn type errors into warnings |

-fwarn-missing-signatures |

Warn about toplevel missing type signatures |

-fwarn-monomorphism-restriction |

Warn when the monomorphism restriction is applied implicitly |

-fwarn-orphans |

Warn on orphan typeclass instances |

-fforce-recomp |

Force recompilation regardless of timestamp |

-fno-code |

Omit code generation, just parse and typecheck |

-fobject-code |

Generate object code |

Like most compilers, GHC takes the -Wall flag to enable

all warnings. However, a few of the enabled warnings are highly verbose.

For example, -fwarn-unused-do-bind and

-fwarn-unused-matches typically would not correspond to

errors or failures.

Any of these flags can be added to the ghc-options

section of a project’s .cabal file. For example:

ghc-options:

-fwarn-tabs

-fwarn-unused-imports

-fwarn-missing-signatures

-fwarn-name-shadowing

-fwarn-incomplete-patternsThe flags described above are simply the most useful. See the official reference for the complete set of GHC’s supported flags.

For information on debugging GHC internals, see the commentary on GHC internals.

Hackage

Hackage is the upstream source of open source Haskell packages. With Haskell’s continuing evolution, Hackage has become many things to developers, but there seem to be two dominant philosophies of uploaded libraries.

A Repository for Production Libraries

In the first philosophy, libraries exist as reliable, community-supported building blocks for constructing higher level functionality on top of a common, stable edifice. In development communities where this method is the dominant philosophy, the authors of libraries have written them as a means of packaging up their understanding of a problem domain so that others can build on their understanding and expertise.

An Experimental Playground

In contrast to the previous method of packaging, a common philosophy in the Haskell community is that Hackage is a place to upload experimental libraries as a means of getting community feedback and making the code publicly available. Library authors often rationalize putting these kinds of libraries up without documentation, often without indication of what the library actually does or how it works. This unfortunately means a lot of Hackage namespace has become polluted with dead-end, bit-rotting code. Sometimes packages are also uploaded purely for internal use within an organisation, or to accompany an academic paper. These packages are often left undocumented as well.

For developers coming to Haskell from other language ecosystems that favor the former philosophy (e.g., Python, JavaScript, Ruby), seeing thousands of libraries without the slightest hint of documentation or description of purpose can be unnerving. It is an open question whether the current cultural state of Hackage is sustainable in light of these philosophical differences.

Needless to say, there is a lot of very low-quality Haskell code and documentation out there today, so being conservative in library assessment is a necessary skill. That said, there are also quite a few phenomenal libraries on Hackage that are highly curated by many people.

As a general rule, if the Haddock documentation for the library does not have a minimal working example, it is usually safe to assume that it is an RFC-style library and probably should be avoided for production code.

There are several heuristics you can use to answer the question Should I Use this Hackage Library:

- Check the Uploaded to see if the author has updated it in the last five years.

- Check the Maintainer email address, if the author has an academic email address and has not uploaded a package in two or more years, it is safe to assume that this is a thesis project and probably should not be used industrially.

- Check the Modules to see if the author has included toplevel Haddock docstrings. If the author has not included any documentation then the library is likely of low-quality and should not be used industrially.

- Check the Dependencies for the bound on

basepackage. If it doesn’t include the latest base included with the latest version of GHC then the code is likely not actively maintained. - Check the reverse Hackage search to see if the package is used by other libraries in the ecosystem. For example: https://packdeps.haskellers.com/reverse/QuickCheck

An example of a bitrotted package:

https://hackage.haskell.org/package/numeric-quest

An example of a well maintained package:

https://hackage.haskell.org/package/QuickCheck

Stackage

Stackage is an alternative opt-in packaging repository which mirrors a subset of Hackage. Packages that are included in Stackage are built in a massive continuous integration process that checks to see that given versions link successfully against each other. This can give a higher degree of assurance that the bounds of a given resolver ensure compatibility.

Stackage releases are built nightly and there are also long-term stable (LTS) releases. Nightly resolvers have a date convention while LTS releases have a major and minor version. For example:

lts-14.22nightly-2020-01-30

See:

GHCi

GHCi is the interactive shell for the GHC compiler. GHCi is where we will spend most of our time in everyday development. Following is a table of useful GHCi commands.

| Command | Shortcut | Action |

|---|---|---|

:reload |

:r |

Code reload |

:type |

:t |

Type inspection |

:kind |

:k |

Kind inspection |

:info |

:i |

Information |

:print |

:p |

Print the expression |

:edit |

:e |

Load file in system editor |

:load |

:l |

Set the active Main module in the REPL |

:module |

:m |

Add modules to imports |

:add |

:ad |

Load a file into the REPL namespace |

:instances |

:in |

Show instances of a typeclass |

:browse |

:bro |

Browse all available symbols in the REPL namespace |

The introspection commands are an essential part of debugging and interacting with Haskell code:

λ: :type 3

3 :: Num a => aλ: :kind Either

Either :: * -> * -> *λ: :info Functor

class Functor f where

fmap :: (a -> b) -> f a -> f b

(<$) :: a -> f b -> f a

-- Defined in `GHC.Base'

...λ: :i (:)

data [] a = ... | a : [a] -- Defined in `GHC.Types'

infixr 5 :Querying the current state of the global environment in the shell is also possible. For example, to view module-level bindings and types in GHCi, run:

λ: :browse

λ: :show bindingsTo examine module-level imports, execute:

λ: :show imports

import Prelude -- implicit

import Data.Eq

import Control.MonadLanguage extensions can be set at the repl.

:set -XNoImplicitPrelude

:set -XFlexibleContexts

:set -XFlexibleInstances

:set -XOverloadedStringsTo see compiler-level flags and pragmas, use:

λ: :set

options currently set: none.

base language is: Haskell2010

with the following modifiers:

-XNoDatatypeContexts

-XNondecreasingIndentation

GHCi-specific dynamic flag settings:

other dynamic, non-language, flag settings:

-fimplicit-import-qualified

warning settings:

λ: :showi language

base language is: Haskell2010

with the following modifiers:

-XNoDatatypeContexts

-XNondecreasingIndentation

-XExtendedDefaultRulesLanguage extensions and compiler pragmas can be set at the prompt. See the Flag Reference for the vast collection of compiler flag options.

Several commands for the interactive shell have shortcuts:

| Function | |

|---|---|

+t |

Show types of evaluated expressions |

+s |

Show timing and memory usage |

+m |

Enable multi-line expression delimited by

:{ and :}. |

λ: :set +t

λ: []

[]

it :: [a]λ: :set +s

λ: foldr (+) 0 [1..25]

325

it :: Prelude.Integer

(0.02 secs, 4900952 bytes)λ: :set +m

λ: :{

λ:| let foo = do

λ:| putStrLn "hello ghci"

λ:| :}

λ: foo

"hello ghci".ghci.conf

The GHCi shell can be customized globally by defining a configure

file ghci.conf in $HOME/.ghc/ or in the

current working directory as ./.ghci.conf.

For example, we can add a command to use the Hoogle type search from

within GHCi. First, install hoogle:

# run one of these command

$ cabal install hoogle

$ stack install hoogleThen, we can enable the search functionality by adding a command to

our ghci.conf:

:set prompt "λ: "

:def hlint const . return $ ":! hlint \"src\""

:def hoogle \s -> return $ ":! hoogle --count=15 \"" ++ s ++ "\""λ: :hoogle (a -> b) -> f a -> f b

Data.Traversable fmapDefault :: Traversable t => (a -> b) -> t a -> t b

Prelude fmap :: Functor f => (a -> b) -> f a -> f bIt is common community tradition to set the prompt to a colored

λ:

:set prompt "\ESC[38;5;208m\STXλ>\ESC[m\STX "GHC can also be coerced into giving slightly better error messages:

-- Better errors

:set -ferror-spans -freverse-errors -fprint-expanded-synonymsGHCi can also use a pretty printing library to format all output,

which is often much easier to read. For example if your project is

already using the amazing pretty-simple library simply

include the following line in your ghci configuration.

:set -ignore-package pretty-simple -package pretty-simple

:def! pretty \ _ -> pure ":set -interactive-print Text.Pretty.Simple.pPrint"

:prettyAnd the default prelude can also be disabled and swapped for something more sensible:

:seti -XNoImplicitPrelude

:seti -XFlexibleContexts

:seti -XFlexibleInstances

:seti -XOverloadedStrings

import Protolude -- or any other preferred preludeGHCi Performance

For large projects, GHCi with the default flags can use quite a bit of memory and take a long time to compile. To speed compilation by keeping artifacts for compiled modules around, we can enable object code compilation instead of bytecode.

:set -fobject-codeEnabling object code compilation may complicate type inference, since

type information provided to the shell can sometimes be less informative

than source-loaded code. This underspecificity can result in breakage

with some language extensions. In that case, you can temporarily

reenable bytecode compilation on a per module basis with the

-fbyte-code flag.

:set -fbyte-code

:load MyModule.hsIf all you need is to typecheck your code in the interactive shell, then disabling code generation entirely makes reloading code almost instantaneous:

:set -fno-codeEditor Integration

Haskell has a variety of editor tools that can be used to provide interactive development feedback and functionality such as querying types of subexpressions, linting, type checking, and code completion. These are largely provided by the haskell-ide-engine which serves as an editor agnostic backend that interfaces with GHC and Cabal to query code.

Vim

Emacs

VSCode

- haskell-ide-engine - Tab completion plugin

- language-haskell - Syntax highlighting plugin

- ghcid - Interactive error reporting plugin

- hie-server - Jump to definition and tag handling plugin

- hlint - Linting and style-checking plugin

- ghcide - Interactive completion plugin

- ormolu-vscode - Code formatting plugin

Linux Packages

There are several upstream packages for Linux packages which are released by GHC development. The key ones of note for Linux are:

For scripts and operations tools, it is common to include commands to add the following apt repositories, and then use these to install the signed GHC and cabal-install binaries (if using Cabal as the primary build system).

$ sudo add-apt-repository -y ppa:hvr/ghc

$ sudo apt-get update

$ sudo apt-get install -y cabal-install-3.0 ghc-8.8.1It is not advisable to use a Linux system package manager to manage Haskell dependencies. Although this can be done, in practice it is better to use Cabal or Stack to create locally isolated builds to avoid incompatibilities.

Names

Names in Haskell exist within a specific namespace. Names are either unqualified of the form:

nubOr qualified by the module where they come from, such as:

Data.List.nubThe major namespaces are described below with their naming conventions

| Namespace | Convention |

|---|---|

| Modules | Uppercase |

| Functions | Lowercase |

| Variables | Lowercase |

| Type Variables | Lowercase |

| Datatypes | Uppercase |

| Constructors | Uppercase |

| Typeclasses | Uppercase |

| Synonyms | Uppercase |

| Type Families | Uppercase |

Modules

A module consists of a set of imports and exports and when compiled generates an interface which is linked against other Haskell modules. A module may reexport symbols from other modules.

-- A module starts with its export declarations of symbols declared in this file.

module MyModule (myExport1, myExport2) where

-- Followed by a set of imports of symbols from other files

import OtherModule (myImport1, myImport2)

-- Rest of the logic and definitions in the module follow

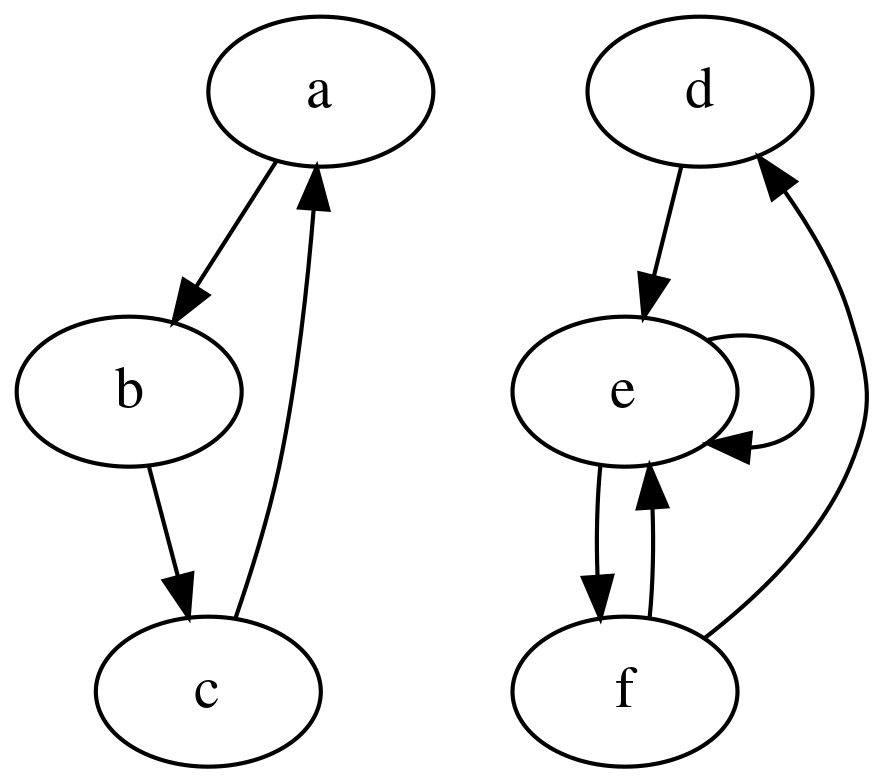

-- ...Modules’ dependency graphs optionally may be cyclic (i.e. they import symbols from each other) through the use of a boot file, but this is often best avoided if at all possible.

Various module import strategies exist. For instance, we may:

Import all symbols into the local namespace.

import Data.ListImport select symbols into the local namespace:

import Data.List (nub, sort)Import into the global namespace masking a symbol:

import Data.List hiding (nub)Import symbols qualified under Data.Map namespace into

the local namespace.

import qualified Data.MapImport symbols qualified and reassigned to a custom namespace

(M, in the example below):

import qualified Data.Map as MYou may also dump multiple modules into the same namespace so long as the symbols do not clash:

import qualified Data.Map as M

import qualified Data.Map.Strict as MA main module is a special module which reserves the name

Main and has a mandatory export of type IO ()

which is invoked when the executable is run.. This is the entry point

from the system into a Haskell program.

module Main where

main = print "Hello World!"Functions

Functions are the central construction in Haskell. A function

f of two arguments x and y can be

defined in a single line as the left-hand and right-hand side of an

equation:

f x y = x + yThis line defines a function named f of two arguments,

which on the right-hand side adds and yields the result. Central to the

idea of functional programming is that computational functions should

behave like mathematical functions. For instance, consider this

mathematical definition of the above Haskell function, which, aside from

the parentheses, looks the same:

f(x,y) = x + y

In Haskell, a function of two arguments need not necessarily be

applied to two arguments. The result of applying only the first argument

is to yield another function to which later the second argument can be

applied. For example, we can define an add function and

subsequently a single-argument inc function, by merely

pre-applying 1 to add:

add x y = x + y

inc = add 1λ: inc 4

5In addition to named functions Haskell also has anonymous lambda functions denoted with a backslash. For example the identity function:

id x = xIs identical to:

id = \x -> xFunctions may call themselves or other functions as arguments; a

feature known as higher-order functions. For example the

following function applies a given argument f, which is

itself a function, to a value x twice.

applyTwice f x = f (f x)Types

Typed functional programming is essential to the modern Haskell paradigm. But what are types precisely?

The syntax of a programming language is described by the constructs that define its types, and its semantics are described by the interactions among those constructs. A type system overlays additional structure on top of the syntax that imposes constraints on the formation of expressions based on the context in which they occur.

Dynamic programming languages associate types with values at evaluation, whereas statically typed languages associate types to expressions before evaluation. Dynamic languages are in a sense as statically typed as static languages, however they have a degenerate type system with only one type.

The dominant philosophy in functional programming is to “make invalid states unrepresentable” at compile-time, rather than performing massive amounts of runtime checks. To this end Haskell has developed a rich type system that is based on typed lambda calculus known as Girard’s System-F (See Rank-N Types) and has incrementally added extensions to support more type-level programming over the years.

The following ground types are quite common:

()- The unit typeChar- A single unicode character (“code point”)Text- Unicode stringsBool- Boolean valuesInt- Machine integersInteger- GMP arbitrary precision integersFloat- Machine floating point valuesDouble- Machine double floating point values

Parameterised types consist of a type and several type parameters indicated as lower case type variables. These are associated with common data structures such as lists and tuples.

[a]– Homogeneous lists with elements of typea(a,b)– Tuple with two elements of typesaandb(a,b,c)– Tuple with three elements of typesa,b, andc

The type system grows quite a bit from here, but these are the foundational types you’ll first encounter. See the later chapters for all types off advanced features that can be optionally turned on.

This tutorial will only cover a small amount of the theory of type systems. For a more thorough treatment of the subject there are two canonical texts:

- Pierce, B. C., & Benjamin, C. (2002). Types and Programming Languages. MIT Press.

- Harper, R. (2016). Practical Foundations for Programming Languages. Cambridge University Press.

Type Signatures

A toplevel Haskell function consists of two lines. The value-level definition which is a function name, followed by its arguments on the left-hand side of the equals sign, and then the function body which computes the value it yields on the right-hand side:

myFunction x y = x ^ 2 + y ^ 2

-- ^ ^ ^ ^^^^^^^^^^^^^

-- | | | |

-- | | | +-- function body

-- | | +------ second argument

-- | +-------- first argument

-- +-------------- functionThe type-level definition is the function name followed by the type of its arguments separated by arrows, and the final term is the type of the entire function body, meaning the type of value yielded by the function itself.

myFunction :: Int -> Int -> Int

-- ^ ^ ^ ^^^^^

-- | | | |

-- | | | +- return type

-- | | +------ second argument

-- | +------------ first argument

-- +----------------------- functionHere is a simple example of a function which adds two integers.

add :: Integer -> Integer -> Integer

add x y = x + yFunctions are also capable of invoking other functions inside of their function bodies:

inc :: Integer -> Integer

inc = add 1The simplest function, called the identity function, is a function which takes a single value and simply returns it back. This is an example of a polymorphic function since it can handle values of any type. The identity function works just as well over strings as over integers.

id :: a -> a

id x = xThis can alternatively be written in terms of an anonymous lambda function which is a backslash followed by a space-separated list of arguments, followed by a function body.

id :: a -> a

id = \x -> xOne of the big ideas in functional programming is that functions are

themselves first class values which can be passed to other functions as

arguments themselves. For example the applyTwice function

takes an argument f which is of type

(a -> a) and it applies that function over a given value

x twice and yields the result. applyTwice is a

higher-order function which will transform one function into another

function.

applyTwice :: (a -> a) -> a -> a

applyTwice f x = f (f x)Often to the left of a type signature you will see a big arrow

=> which denotes a set of constraints

over the type signature. Each of these constraints will be in uppercase

and will normally mention at least one of the type variables on the

right hand side of the arrow. These constraints can mean many things but

in the simplest form they denote that a type variable must have an

implementation of a type class. The

add function below operates over any two similar values

x and y, but these values must have a

numerical interface for adding them together.

add :: (Num a) => a -> a -> a

add x y = x + yType signatures can also appear at the value level in the form of explicit type signatures which are denoted in parentheses.

add1 :: Int -> Int

add1 x = x + (1 :: Int)These are sometimes needed to provide additional hints to the typechecker when specific terms are ambiguous to the typechecker, or when additional language extensions have been enabled which don’t have precise inference methods for deducing all type variables.

Currying

In other languages functions normally have an arity which prescribes the number of arguments a function can take. Some languages have fixed arity (like Fortran) others have flexible arity (like Python) where a variable of number of arguments can be passed. Haskell follows a very simple rule: all functions in Haskell take a single argument. For multi-argument functions (some of which we’ve already seen), arguments will be individually applied until the function is saturated and the function body is evaluated.

For example, the add function from above can be partially applied to produce an add1 function:

add :: Int -> Int -> Int

add x y = x + y

add1 :: Int -> Int

add1 = add 1Uncurrying is the process of taking a function which takes two arguments and transforming it into a function which takes a tuple of arguments. The Haskell prelude includes both a curry and an uncurry function for transforming functions into those that take multiple arguments from those that take a tuple of arguments and vice versa:

curry :: ((a, b) -> c) -> a -> b -> c

uncurry :: (a -> b -> c) -> (a, b) -> cFor example, uncurry applied to the add function creates a function that takes a tuple of integers:

uncurryAdd :: (Int, Int) -> Int

uncurryAdd = uncurry add

example :: Int

example = uncurryAdd (1,2)Algebraic Datatypes

Custom datatypes in Haskell are defined with the data

keyword followed by the the type name, its parameters, and then a set of

constructors. The possible constructors are either sum

types or of product types. All datatypes in Haskell can be

expressed as sums of products. A sum type is a set of options that is

delimited by a pipe.

A datatype can only ever be inhabited by a single value from a sum

type and intuitively models a set of “options” a value may take. While a

product type is a combination of a set of typed values, potentially

named by record fields. For example the following are two definitions of

a Point product type, the latter with two fields x and

y.

data Point = Point Int Int

data Point = Point { x :: Int, y :: Int }As another example: A deck of common playing cards could be modeled by the following set of product and sum types:

data Suit = Clubs | Diamonds | Hearts | Spades

data Color = Red | Black

data Value

= Two

| Three

| Four

| Five

| Six

| Seven

| Eight

| Nine

| Ten

| Jack

| Queen

| King

| Ace

deriving (Eq, Ord)A record type can use these custom datatypes to define all the parameters that define an individual playing card.

data Card = Card

{ suit :: Suit

, color :: Color

, value :: Value

}Some example values:

queenDiamonds :: Card

queenDiamonds = Card Diamonds Red Queen

-- Alternatively

queenDiamonds :: Card

queenDiamonds = Card { suit = Diamonds, color = Red, value = Queen }The problem with the definition of this datatype is that it admits

several values which are malformed. For instance it is possible to

instantiate a Card with a suit Hearts but with

color Black which is an invalid value. The convention for

preventing these kind of values in Haskell is to limit the export of

constructors in a module and only provide a limited set of functions

which the module exports, which can enforce these constraints. These are

smart constructors and an extremely common pattern in

Haskell library design. For example we can export functions for building

up specific suit cards that enforce the color invariant.

module Cards (Card, diamond, spade, heart, club) where

diamond :: Value -> Card

diamond = Card Diamonds Red

spade :: Value -> Card

spade = Card Spades Black

heart :: Value -> Card

heart = Card Hearts Red

club :: Value -> Card

club = Card Clubs BlackDatatypes may also be recursive, in the sense that they can contain themselves as fields. The most common example is a linked list which can be defined recursively as either an empty list or a value linked to a potentially nested version of itself.

data List a = Nil | List a (List a)An example value would look like:

list :: List Integer

list = List 1 (List 2 (List 3 Nil))Constructors for datatypes can also be defined as infix symbols. This

is somewhat rare, but is sometimes used in more math heavy libraries.

For example the constructor for our list type could be defined as the

infix operator :+:. When the value is printed using a Show

instance, the operator will be printed in infix form.

data List a = Nil | a :+: (List a)Lists

Linked lists or cons lists are a fundamental data structure

in functional programming. GHC has builtin syntactic sugar in the form

of list syntax which allows us to write lists that expand into explicit

invocations of the cons operator (:). The operator

is right associative and an example is shown below:

[1,2,3] = 1 : 2 : 3 : []

[1,2,3] = 1 : (2 : (3 : [])) -- with explicit parensThis syntax also extends to the typelevel where lists are represented as brackets around the type of values they contain.

myList1 :: [Int]

myList1 = [1,2,3]

myList2 :: [Bool]

myList2 = [True, True, False]The cons operator itself has the type signature which takes a head element as its first argument and a tail argument as its second.

(:) :: a -> [a] -> [a]The Data.Listmodule from the standard Prelude defines a

variety of utility functions for operations over linked lists. For

example the length function returns the integral length of

the number of elements in the linked list.

length :: [a] -> IntWhile the take function extracts a fixed number of

elements from the list.

take :: Int -> [a] -> [a]Both of these functions are pure and return a new list without modifying the underlying list passed as an argument.

Another function iterate is an example of a function

which returns an infinite list. It takes as its first argument

a function and then repeatedly applies that function to produce a new

element of the linked list.

iterate :: (a -> a) -> a -> [a]Consuming these infinite lists can be used as a control flow construct to construct loops. For example instead of writing an explicit loop, as we would in other programming languages, we instead construct a function which generates list elements. For example producing a list which produces subsequent powers of two:

powersOfTwo = iterate (2*) 1We can then use the take function to evaluate this

lazy stream to a desired depth.

λ: take 15 powersOfTwo

[1,2,4,8,16,32,64,128,256,512,1024,2048,4096,8192,16384]An equivalent loop in an imperative language would look like the following.

def powersOfTwo(n):

square_list = [1]

for i in range(1,n+1):

square_list.append(2 ** i)

return square_list

print(powersOfTwo(15))Pattern Matching

To unpack an algebraic datatype and extract its fields we’ll use a

built in language construction known as pattern matching. This

is denoted by the case syntax and scrutinizes a

specific value. A case expression will then be followed by a sequence of

matches which consist of a pattern on the left and an

arbitrary expression on the right. The left patterns will all consist of

constructors for the type of the scrutinized value and should enumerate

all possible constructors. For product type patterns that are

scrutinized a sequence of variables will bind the fields associated with

its positional location in the constructor. The types of all expressions

on the right hand side of the matches must be identical.

Pattern matches can be written in explicit case statements or in toplevel functional declarations. The latter simply expands the former in the desugaring phase of the compiler.

data Example = Example Int Int Int

example1 :: Example -> Int

example1 x = case x of

Example a b c -> a + b + c

example2 :: Example -> Int

example2 (Example a b c) = a + b +cFollowing on the playing card example in the previous section, we could use a pattern to produce a function which scores the face value of a playing card.

value :: Value -> Integer

value card = case card of

Two -> 2

Three -> 3

Four -> 4

Five -> 5

Six -> 6

Seven -> 7

Eight -> 8

Nine -> 9

Ten -> 10

Jack -> 10

Queen -> 10

King -> 10

Ace -> 1And we can use a double pattern match to produce a function which

determines which suit trumps another suit. For example we can introduce

an order of suits of cards where the ranking of cards proceeds (Clubs,

Diamonds, Hearts, Spaces). A _ underscore used inside a

pattern indicates a wildcard pattern and matches against any constructor

given. This should be the last pattern used a in match list.

suitBeats :: Suit -> Suit -> Bool

suitBeats Clubs Diamonds = True

suitBeats Clubs Hearts = True

suitBeats Clubs Spaces = True

suitBeats Diamonds Hearts = True

suitBeats Diamonds Spades = True

suitBeats Hearts Spades = True

suitBeats _ _ = FalseAnd finally we can write a function which determines if another card beats another card in terms of the two pattern matching functions above. The following pattern match brings the values of the record into the scope of the function body assigning to names specified in the pattern syntax.

beats :: Card -> Card -> Bool

beats (Card suit1 color1 value1) (Card suit2 color2 value2) =

(suitBeats suit1 suit2) && (value1 > value2)Functions may also invoke themselves. This is known as recursion. This is quite common in pattern matching definitions which recursively tear down or build up data structures. This kind of pattern is one of the defining modes of programming functionally.

The following two recursive pattern matches are desugared forms of each other:

fib :: Integer -> Integer

fib 0 = 0

fib 1 = 1

fib n = fib (n-1) + fib (n-2)fib :: Integer -> Integer

fib m = case m of

0 -> 0

1 -> 1

n -> fib (n-1) + fib(n-2)Pattern matching on lists is also an extremely common pattern. It has

special pattern syntax and the tail variable is typically pluralized. In

the following x denotes the head variable and

xs denotes the tail. For example the following function

traverses a list of integers and adds (+1) to each

value.

addOne :: [Int] -> [Int]

addOne (x : xs) = (x+1) : (addOne xs)

addOne [] = []Guards

Guard statements are expressions that evaluate to boolean values that

can be used to restrict pattern matches. These occur in a pattern match

statements at the toplevel with the pipe syntax on the left indicating

the guard condition. The special otherwise condition is

just a renaming of the boolean value True exported from

Prelude.

absolute :: Int -> Int

absolute n

| n < 0 = (-n)

| otherwise = nGuards can also occur in pattern case expressions.

absoluteJust :: Maybe Int -> Maybe Int

absoluteJust n = case n of

Nothing -> Nothing

Just n

| n < 0 -> Just (-n)

| otherwise -> Just nOperators and Sections

An operator is a function that can be applied using infix syntax or partially applied using a section. Operators can be defined to use any combination of the special ASCII symbols or any unicode symbol.

! # % &

* + . /

< = > ?

@ \ ^ |

- ~ :

The following are reserved syntax and cannot be overloaded:

.. : :: =

\ | <- ->

@ ~ =>

Operators are of one of three fixity classes.

- Infix - Place between expressions

- Prefix - Placed before expressions

- Postfix - Placed after expressions. See Postfix Operators.

Expressions involving infix operators are disambiguated by the operator’s fixity and precedence. Infix operators are either left or right associative. Associativity determines how operators of the same precedence are grouped in the absence of parentheses.

a + b + c + d = ((a + b) + c) + d -- left associative

a + b + c + d = a + (b + (c + d)) -- right associativePrecedence and associativity are denoted by fixity declarations for

the operator using either infixr infixl and

infix. The standard operators defined in the Prelude have

the following precedence table.

infixr 9 .

infixr 8 ^, ^^, **

infixl 7 *, /, `quot`, `rem`, `div`, `mod`

infixl 6 +, -

infixr 5 ++

infix 4 ==, /=, <, <=, >=, >

infixr 3 &&

infixr 2 ||

infixr 1 >>, >>=

infixr 0 $, `seq`Sections are written as ( op e ) or

( e op ). For example:

(+1) 3

(1+) 3Operators written within enclosed parens are applied like traditional functions. For example the following are equivalent:

(+) x y = x + yTuples

Tuples are heterogeneous structures which contain a fixed number of values. Some simple examples are shown below:

-- 2-tuple

tuple2 :: (Integer, String)

tuple2 = (1, "foo")

-- 3-tuple

tuple3 :: (Integer, Integer, Integer)

tuple3 = (10, 20, 30)For two-tuples there are two functions fst and

snd which extract the left and right values

respectively.

fst :: (a,b) -> a

snd :: (a,b) -> bGHC supports tuples to size 62.

Where & Let Clauses

Haskell syntax contains two different types of declaration syntax:

let and where. A let binding is an expression

and binds anywhere in its body. For example the following let binding

declares x and y in the expression

x+y.

f = let x = 1; y = 2 in (x+y)A where binding is a toplevel syntax construct (i.e. not an

expression) that binds variables at the end of a function. For example

the following binds x and y in the function

body of f which is x+y.

f = x+y where x=1; y=1Where clauses following the Haskell layout rule where definitions can be listed on newlines so long as the definitions have greater indentation than the first toplevel definition they are bound to.

f = x+y

where

x = 1

y = 1Conditionals

Haskell has builtin syntax for scrutinizing boolean expressions.

These are first class expressions known as if statements.

An if statement is of the form

if cond then trueCond else falseCond. Both the

True and False statements must be present.

absolute :: Int -> Int

absolute n =

if (n < 0)

then (-n)

else nIf statements are just syntactic sugar for case

expressions over boolean values. The following example is equivalent to

the above example.

absolute :: Int -> Int

absolute n = case (n < 0) of

True -> (-n)

False -> nFunction Composition

Functions are obviously at the heart of functional programming. In mathematics function composition is an operation which takes two functions and produces another function with the result of the first argument function applied to the result of the second function. This is written in mathematical notation as:

g ∘ f

The two functions operate over a domain. For example X, Y and Z.

f : X → Y g : Y → Z

Or in Haskell notation:

f :: X -> Y

g :: Y -> ZComposition operation results in a new function:

g ∘ f : X → Z

In Haskell this operator is given special infix operator to appear

similar to the mathematical notation. Intuitively it takes two functions

of types b -> c and a -> b and composes

them together to produce a new function. This is the canonical example

of a higher-order function.

(.) :: (b -> c) -> (a -> b) -> a -> c

f . g = \x -> f (g x)Haskell code will liberally use this operator to compose chains of

functions. For example the following composes a chain of list processing

functions sort, filter and

map:

example :: [Integer] -> [Integer]

example =

sort

. filter (<100)

. map (*10)Another common higher-order function is the flip

function which takes as its first argument a function of two arguments,

and reverses the order of these two arguments returning a new

function.

flip :: (a -> b -> c) -> b -> a -> cThe most common operator in all of Haskell is the function

application operator $. This function is right associative

and takes the entire expression on the right hand side of the operator

and applies it to a function on the left.

infixr 0 $

($) :: (a -> b) -> a -> b This is quite often used in the pattern where the left hand side is a composition of other functions applied to a single argument. This is common in point-free style of programming which attempts to minimize the number of input arguments in favour of pure higher order function composition. The flipped form of this function does the opposite and is left associative, and applies the entire left hand side expression to a function given in the second argument to the function.

infixl 1 &

(&) :: a -> (a -> b) -> b For comparison consider the use of $, &

and explicit parentheses.

ex1 = f1 . f2 . f3 . f4 $ input -- with ($)

ex1 = input & f1 . f2 . f3 . f4 -- with (&)

ex1 = (f1 . f2 . f3 . f4) input -- with explicit parensThe on function takes a function b and

yields the result of applying unary function u to two

arguments x and y. This is a higher order

function that transforms two inputs and combines the outputs.

on :: (b -> b -> c) -> (a -> b) -> a -> a -> c This is used quite often in sort functions. For example we can write a custom sort function which sorts a list of lists based on length.

λ: import Data.List

λ: sortSize = sortBy (compare `on` length)

λ: sortSize [[1,2], [1,2,3], [1]]

[[1],[1,2],[1,2,3]]List Comprehensions

List comprehensions are a syntactic construct that first originated in the Haskell language and has now spread to other programming languages. List comprehensions provide a simple way of working with lists and sequences of values that follow patterns. List comprehension syntax consists of three components:

- Generators - Expressions which evaluate a list of values which are iteratively added to the result.

- Let bindings - Expressions which generate a constant value which is scoped on each iteration.

- Guards - Expressions which generate a boolean expression which determine whether an iteration is added to the result.

The simplest generator is simply a list itself. The following example produces a list of integral values, each element multiplied by two.

λ: [2*x | x <- [1,2,3,4,5]]

-- ^^^^^^^^^^^^^^^^

-- Generator

[2,4,6,8,10]We can extend this by adding a let statement which generalizes the

multiplier on each step and binds it to a variable n.

λ: [n*x | x <- [1,2,3,4,5], let n = 3]

-- ^^^^^^^^^

-- Let binding

[3,6,9,12,15]And we can also restrict the set of resulting values to only the

subset of values of x that meet a condition. In this case

we restrict to only values of x which are odd.

λ: [n*x | x <- [1,2,3,4,5], let n = 3, odd x]

-- ^^^^^

-- Guard

[3,9,15]Comprehensions with multiple generators will combine each generator pairwise to produce the cartesian product of all results.

λ: [(x,y) | x <- [1,2,3], y <- [10,20,30]]

[(1,10),(1,20),(1,30),(2,10),(2,20),(2,30),(3,10),(3,20),(3,30)]

λ: [(x,y,z) | x <- [1,2], y <- [10,20], z <- [100,200]]

[(1,10,100),(1,10,200),(1,20,100),(1,20,200),(2,10,100),(2,10,200),(2,20,100),(2,20,200)]Haskell has builtin comprehension syntax which is syntactic sugar for

specific methods of the Enum typeclass.

| Syntax Sugar | Enum Class Method |

|---|---|

[ e1.. ] |

enumFrom e1 |

[ e1,e2.. ] |

enumFromThen e1 e2 |

[ e1..e3 ] |

enumFromTo e1 e3 |

[ e1,e2..e3 ] |

enumFromThenTo e1 e2 e3 |

There is an Enum instance for Integer and

Char types and so we can write list comprehensions for

both, which generate ranges of values.

λ: [1 .. 15]

[1,2,3,4,5,6,7,8,9,10,11,12,13,14,15]

λ: ['a' .. 'z']

"abcdefghijklmnopqrstuvwxyz"

λ: [1,3 .. 15]

[1,3,5,7,9,11,13,15]

λ: [0,50..500]

[0,50,100,150,200,250,300,350,400,450,500]These comprehensions can be used inside of function definitions and

reference locally bound variables. For example the

factorial function (written as n!) is defined as the product of all

positive integers up to a given value.

factorial :: Integer -> Integer

factorial n = product [1..n]As a more complex example consider a naive prime number sieve:

primes :: [Integer]

primes = sieve [2..]

where

sieve (p:xs) = p : sieve [ n | n <- xs, n `mod` p > 0 ]And a more complex example, consider the classic FizzBuzz interview question. This makes use of iteration and guard statements.

fizzbuzz :: [String]

fizzbuzz = [fb x| x <- [1..100]]

where fb y

| y `mod` 15 == 0 = "FizzBuzz"

| y `mod` 3 == 0 = "Fizz"

| y `mod` 5 == 0 = "Buzz"

| otherwise = show yComments

Single line comments begin with double dashes --:

-- Everything should be built top-down, except the first time.Multiline comments begin with {- and end with

-}.

{-

The goal of computation is the emulation of our synthetic abilities, not the

understanding of our analytic ones.

-}Comments may also add additional structure in the form of Haddock docstrings. These comments will begin with a pipe.

{-|

Great ambition without contribution is without significance.

-}Modules may also have a comment convention which describes the individual authors, copyright and stability information in the following form:

{-|

Module : MyEnterpriseModule

Description : Make it so.

Copyright : (c) Jean Luc Picard

License : MIT

Maintainer : jl@enterprise.com

Stability : experimental

Portability : POSIX

Description of module structure in Haddock markup style.

-}Typeclasses

Typeclasses are one of the core abstractions in Haskell. Just as we

wrote polymorphic functions above which operate over all given types

(the id function is one example), we can use typeclasses to

provide a form of bounded polymorphism which constrains type variables

to a subset of those types that implement a given class.

For example we can define an equality class which allows us to define an overloaded notion of equality depending on the data structure provided.

class Equal a where

equal :: a -> a -> BoolThen we can define this typeclass over several different types. These

definitions are called typeclass instances. For example

for the Bool type the equality typeclass would be defined

as:

instance Equal Bool where

equal True True = True

equal False False = True

equal True False = False

equal False True = FalseOver the unit type, where only a single value exists, the instance is trivial:

instance Equal () where

equal () () = TrueFor the Ordering type, defined as:

data Ordering = LT | EQ | GTWe would have the following Equal instance:

instance Equal Ordering where

equal LT LT = True

equal EQ EQ = True

equal GT GT = True

equal _ _ = FalseAn Equal instance for a more complex data structure like the list

type relies upon the fact that the type of the elements in the list must

also have a notion of equality, so we include this as a constraint in

the typeclass context, which is written to the left of the fat arrow

=>. With this constraint in place, we can write this

instance recursively by pattern matching on the list elements and

checking for equality all the way down the spine of the list:

instance (Equal a) => Equal [a] where

equal [] [] = True -- Empty lists are equal

equal [] ys = False -- Lists of unequal size are not equal

equal xs [] = False

-- equal x y is only allowed here due to the constraint (Equal a)

equal (x:xs) (y:ys) = equal x y && equal xs ysIn the above definition, we know that we can check for equality between individual list elements if those list elements satisfy the Equal constraint. Knowing that they do, we can then check for equality between two complete lists.

For tuples, we will also include the Equal constraint for their

elements, and we can then check each element for equality respectively.

Note that this instance includes two constraints in the context of the

typeclass, requiring that both type variables a and

b must also have an Equal instance.

instance (Equal a, Equal b) => Equal (a,b) where

equal (x0, x1) (y0, y1) = equal x0 y0 && equal x1 y1The default prelude comes with a variety of typeclasses that are used frequently and defined over many prelude types:

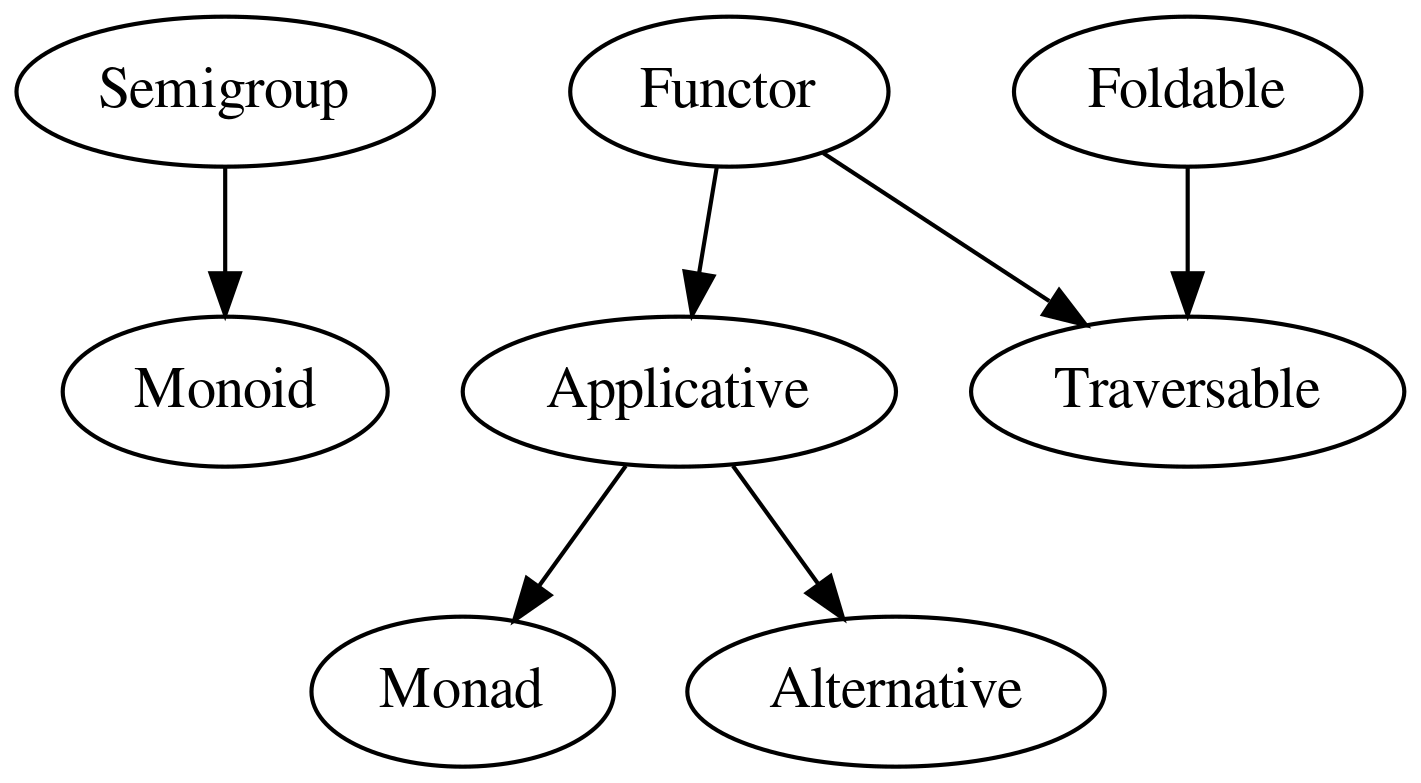

- Num - Provides a basic numerical interface for values with addition, multiplication, subtraction, and negation.

- Eq - Provides an interface for values that can be tested for equality.

- Ord - Provides an interface for values that have a total ordering.

- Read - Provides an interface for values that can be read from a string.

- Show - Provides an interface for values that can be printed to a string.

- Enum - Provides an interface for values that are enumerable to integers.